What Are AI Agents? Architecture, Components, and Workflows

For years, we’ve been fascinated by AI that can answer any question, but the focus is shifting from chatbots that simply ‘know’ things to AI agents that can ‘do’ things. Unlike traditional chatbots, these autonomous systems handle real-world tasks on your behalf. For example, an agent does not just write your travel itinerary; it can also open your browser, compare flight prices, negotiate a refund, and update your calendar. This automation saves time and simplifies complex processes.

Why LLMs Alone Are Insufficient for Autonomous Systems

However, Large Language Models (LLMs) alone are not sufficient for building autonomous systems that do more than just answer your customers’ queries. On their own, they respond to prompts but lack the ability to make independent decisions or take actions beyond generating text. CEOs and other executives are looking for AI use cases that will:

- Decide what to do next without constant human input.

- Gather and interpret data from multiple sources.

- Adapt their behavior based on outcomes and feedback.

- Coordinate actions across tools, systems, and even human resources.

This is where AI agents address business needs by reasoning, planning, acting, observing outcomes, and adjusting behavior through predefined feedback mechanisms to drive business outcomes.

Emergence of Agentic Systems in Modern AI Platforms

Modern AI platforms increasingly support agentic AI systems, in which one or more AI agents work toward completing complex tasks. In multi-agent setups, specialized agents collaborate, exchange data, and divide responsibilities.

For example, one AI agent might focus on information retrieval, another on decision-making, and another on execution, while an orchestrator agent coordinates these specialists to achieve a broader objective.

This multi-agent approach allows organizations to automate complex workflows that would be difficult to manage with a single monolithic model. By collaborating, individual autonomous systems can handle specialized tasks, communicate, and adapt, resulting in improved efficiency, scalability, and responsiveness in powering end-to-end business processes, customer support systems, logistics optimization, cybersecurity monitoring, and more.
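The division of labor described above can be sketched in a few lines of Python. This is a minimal illustration, not a production design: the three agent functions, the shared `state` dictionary, and the hard-coded quotes are all hypothetical, and real specialist agents would call models and external systems instead.

```python
from typing import Callable, List

# Hypothetical specialist agents. Each one takes the shared state and
# returns an updated copy; real agents would call models or tools here.
def retrieval_agent(state: dict) -> dict:
    # Hard-coded quotes stand in for a real lookup.
    return {**state, "quotes": {"carrier_a": 420, "carrier_b": 385}}

def decision_agent(state: dict) -> dict:
    quotes = state["quotes"]
    cheapest = min(quotes, key=quotes.get)
    return {**state, "choice": cheapest}

def execution_agent(state: dict) -> dict:
    return {**state, "status": f"booked {state['choice']}"}

def orchestrate(goal: str, agents: List[Callable[[dict], dict]]) -> dict:
    # The orchestrator passes state from one specialist to the next.
    state = {"goal": goal}
    for agent in agents:
        state = agent(state)
    return state

result = orchestrate(
    "ship one pallet",
    [retrieval_agent, decision_agent, execution_agent],
)
print(result["status"])
```

A sequential hand-off is the simplest form of coordination; an orchestrator could just as well run specialists in parallel or choose them dynamically.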

To understand how these agents function, let’s dive deeper into the core concepts and architecture of autonomous systems.

What Are AI Agents?

An AI agent is a system that can interact with its environment, collect data, and use that data to autonomously perform self-directed tasks to achieve predetermined goals. While humans define the goals, the agent independently determines the best actions needed to reach them. These actions may include asking questions, retrieving information from internal or external systems, invoking APIs, or collaborating with other AI agents or humans.

AI Agent Architecture

The AI agent architecture describes how an autonomous system operates at runtime, as a running system rather than a static model. Unlike traditional automation or standalone LLM-based applications, agentic systems are designed to continuously perceive their environment, reason over context, take actions, and adjust behavior over time. Four elements are needed to build successful AI agents:

- A model or framework (e.g., an LLM) that allows the AI agent to develop reasoned solutions and arrive at decisions.

- Tools that provide the AI agent with access to techniques, services, or other external methods (for example, APIs) to enable the agent to perform the desired actions.

- Memory that allows the agent to retain short-term context and, often via vector storage, long-term knowledge. This enables the agent to learn from prior experiences and reflect on past actions, adding depth to its problem-solving.

- Specific guidance (e.g., constraints) defining how the AI agent behaves.

Together, these elements form the execution core of an agentic system and define how autonomous systems work in real-world environments. Rather than following fixed rules, agents rely on contextual reasoning to handle ambiguity, unstructured data, and exceptions, making them suitable for workflows that resist traditional automation.
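One way to picture how the four elements fit together is as fields of a single configuration object. The sketch below is an assumption made for illustration: `AgentSpec`, `build_prompt`, and `echo_model` are hypothetical names, and the model is a stub function rather than a real LLM call.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

@dataclass
class AgentSpec:
    model: Callable[[str], str]                # reasoning component (stub for an LLM)
    tools: Dict[str, Callable[..., object]]    # named external capabilities
    memory: List[str] = field(default_factory=list)    # retained context
    guidance: List[str] = field(default_factory=list)  # behavioral constraints

    def build_prompt(self, request: str) -> str:
        # Combine guidance, tool names, and recent memory into one prompt.
        lines = ["Rules:"] + [f"- {rule}" for rule in self.guidance]
        lines.append("Available tools: " + ", ".join(sorted(self.tools)))
        lines += ["Recent context:"] + [f"- {m}" for m in self.memory[-3:]]
        lines.append(f"Request: {request}")
        return "\n".join(lines)

def echo_model(prompt: str) -> str:
    # Placeholder: reports how much context it received.
    return f"(model saw {len(prompt.splitlines())} lines)"

spec = AgentSpec(
    model=echo_model,
    tools={"search": lambda query: [], "send_email": lambda to, body: True},
    memory=["user prefers morning flights"],
    guidance=["Never spend more than the approved budget."],
)
prompt = spec.build_prompt("Find a flight to Berlin")
print(spec.model(prompt))
```

Keeping the four elements separate makes it possible to swap the model, add a tool, or tighten the guidance without touching the rest of the agent.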

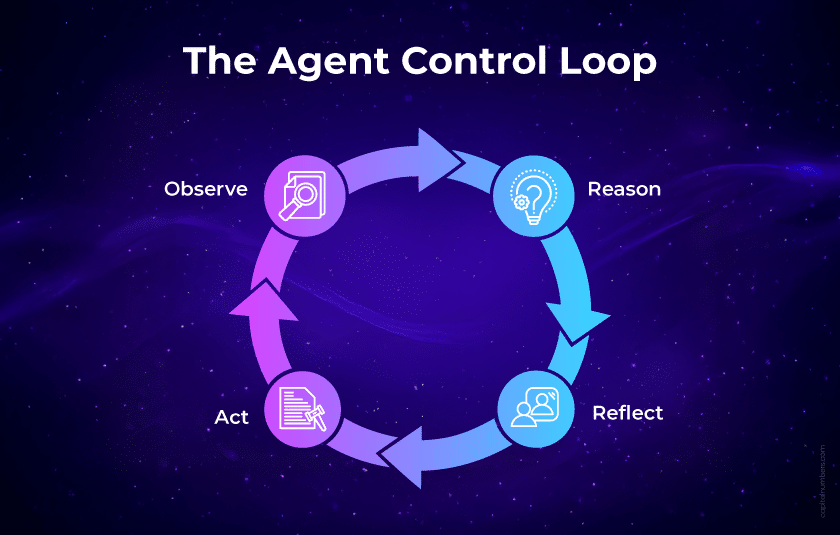

The Agent Control Loop

At runtime, AI agents operate through a control loop that governs perception, reasoning, action, and adaptation. This loop is central to understanding how AI agents function as autonomous systems.

Observe

The agent begins by perceiving its environment. Inputs may include user queries, system logs, structured API responses, documents, or sensor data. This perception phase involves ingesting, cleaning, and structuring raw data for effective use. Techniques such as natural language processing, entity recognition, anomaly detection, or data extraction are often applied at this stage.

Reason

After interpreting the inputs, the agent identifies a range of possible actions. It can apply several methods, including logical rules, probabilistic models, heuristics, and deep learning algorithms. Many modern agents use reasoning paradigms to weigh the context, the uncertainty about the next step, and the available options before choosing an action. Because it can reason about context in multiple ways, an agent can approach a problem from several angles and develop a more nuanced view of solutions than traditional robotic process automation (RPA).

Act

Based on its reasoning and planning, the agent executes actions. These actions may include calling APIs, querying databases, triggering workflows, sending messages, or interacting with external systems. Tool calling enables the agent to extend its capabilities beyond its internal knowledge, allowing it to retrieve real-time information or perform operations in external environments.

Reflect

After acting, the agent observes the outcome and updates its internal state. Reflection allows the agent to assess whether its actions moved it closer to its goal. Feedback, explicit or implicit, can inform future decisions, making reflection a critical part of continuous improvement and learning.

This loop directly connects to the components that make up an AI agent, as each phase relies on specific components such as perception modules, reasoning engines, memory, and tool interfaces.
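The four phases can be illustrated with a toy loop. In this sketch, an agent restocks an inventory until it reaches a target; the environment, the ordering rule, and every function name are assumptions chosen for illustration, and a simple rule stands in for model-based reasoning.

```python
from typing import Dict, List, Tuple

def observe(env: Dict[str, int]) -> Dict[str, int]:
    # Observe: take a snapshot of the relevant state.
    return {"stock": env["stock"]}

def reason(obs: Dict[str, int], target: int, max_order: int) -> Tuple[str, int]:
    # Reason: pick the next action from the current observation.
    gap = target - obs["stock"]
    if gap <= 0:
        return ("stop", 0)
    return ("order", min(gap, max_order))

def act(env: Dict[str, int], action: Tuple[str, int]) -> None:
    # Act: apply the chosen action to the environment.
    name, quantity = action
    if name == "order":
        env["stock"] += quantity

def reflect(history: List[str], before: Dict[str, int],
            action: Tuple[str, int], env: Dict[str, int]) -> None:
    # Reflect: record what the action changed, for later decisions.
    history.append(
        f"{action[0]} {action[1]}: stock {before['stock']} -> {env['stock']}"
    )

def run_agent(env: Dict[str, int], target: int,
              max_order: int = 40, max_steps: int = 10) -> List[str]:
    history: List[str] = []
    for _ in range(max_steps):  # a step cap guards against endless loops
        obs = observe(env)
        action = reason(obs, target, max_order)
        if action[0] == "stop":
            break
        act(env, action)
        reflect(history, obs, action, env)
    return history

env = {"stock": 10}
log = run_agent(env, target=100)
for entry in log:
    print(entry)
```

The `max_steps` cap is a deliberate design choice: an agent that never reaches its goal should stop and surface the problem rather than loop indefinitely.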

Event-Driven vs. Task-Driven Agents

The agentic workflow can also differ based on how execution is triggered:

Task-driven agents

These agents complete a defined objective by ingesting inputs, planning actions, executing steps, and stopping when the objective is complete. This model fits clearly scoped business processes.

Event-driven agents

These agents respond to events such as system changes or alerts, continuously observing their environment and acting when specific conditions are met.

Both approaches rely on the same underlying control loop but differ in how and when it is activated. Understanding this distinction is essential for designing an agentic workflow that fits individual business requirements.
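The difference in triggering can be shown with a small sketch. Both styles below share the same `handle` step and differ only in what starts it. The step names, the event format, and the severity threshold are hypothetical.

```python
from typing import Callable, Dict, List

def handle(item: str) -> str:
    # Shared control-loop step, reduced to one function for brevity.
    return f"handled:{item}"

def run_task_driven(steps: List[str]) -> List[str]:
    # Task-driven: work through a defined objective, then stop.
    return [handle(step) for step in steps]

class EventDrivenAgent:
    def __init__(self, condition: Callable[[Dict], bool]) -> None:
        self.condition = condition
        self.results: List[str] = []

    def on_event(self, event: Dict) -> None:
        # Event-driven: act only when the condition holds, else keep watching.
        if self.condition(event):
            self.results.append(handle(event["name"]))

task_output = run_task_driven(
    ["collect invoice", "validate totals", "post to ledger"]
)

watcher = EventDrivenAgent(condition=lambda event: event["severity"] >= 3)
for event in [
    {"name": "disk_warning", "severity": 1},
    {"name": "login_anomaly", "severity": 4},
]:
    watcher.on_event(event)

print(task_output)
print(watcher.results)
```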

Reasoning Inside AI Agents: Chain of Thought Prompting

Within the AI agent architecture, reasoning is the mechanism that connects perception to action. While the agent control loop describes when reasoning happens, chain of thought (CoT) prompting explains how reasoning unfolds inside language-model-driven agents, particularly for complex, multistep tasks.

Chain of thought prompting is a prompt engineering technique that encourages language models to generate intermediate reasoning steps rather than jumping directly to a final answer. This makes it especially relevant for autonomous systems that must plan, evaluate alternatives, and make decisions across multiple steps within their workflow.

What Is Chain of Thought (CoT) Prompting?

Chain of thought prompting guides a model to “think out loud” by producing a structured sequence of steps that lead to the conclusion. Instead of returning a single response, the model articulates how it arrived at that response.

This technique was introduced to improve performance on tasks involving arithmetic, symbolic, and common-sense reasoning. Prompting models to generate intermediate reasoning steps can significantly improve accuracy on multistep problems.

Why Chain of Thought Matters for AI Agents

Autonomous systems make decisions based on incomplete knowledge that changes over time. CoT prompting enhances the ‘Reason’ part of an AI agent’s loop by providing a structured way to determine what to do next from the available choices. Chain of thought prompting assists an AI agent’s decision-making in the following ways:

- By providing a framework for breaking complex problems into easy-to-understand steps.

- By offering a way to inspect and verify the logic behind a decision.

- By reducing the likelihood that autonomous systems give inaccurate answers with high confidence.

- By supporting the evaluation of potential outcomes for each candidate decision.

For example, instead of selecting an action that merely sounds plausible, an AI agent using CoT can reason through constraints, dependencies, and outcomes before acting. This is critical when designing AI agent workflows step by step, where each decision influences downstream actions.

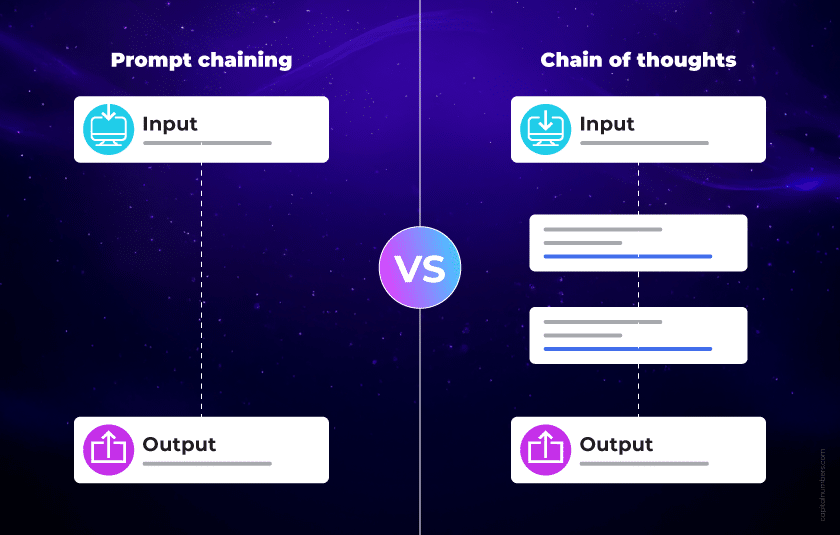

| Aspect | Chain of Thought (CoT) Prompting | Prompt Chaining |
|---|---|---|
| What is it? | Elicits reasoning steps within a single prompt. | Breaks a task into multiple prompts executed sequentially. |
| How it works | The model generates intermediate reasoning steps before producing an answer. | Each prompt uses the output of the previous prompt as its input. |
| Prompt structure | Single prompt with instructions to explain reasoning step by step. | Multiple prompts linked together in a sequence. |
| Primary purpose | Improve reasoning clarity and multistep problem-solving. | Break down complex tasks into smaller, manageable steps. |
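The contrast in the table can be made concrete with a short sketch. `cot_prompt` builds one prompt that asks for step-by-step reasoning, while `run_chain` sends several prompts in sequence and feeds each output into the next. The function names, the prompt wording, and `fake_model` (a stub that upper-cases the last line of its prompt) are all assumptions; a real agent would call an actual model.

```python
from typing import Callable, List

def cot_prompt(question: str) -> str:
    # Chain of thought: a single prompt that asks for intermediate steps.
    return (
        f"{question}\n"
        "Explain your reasoning step by step, "
        "then give the final answer on a line starting with 'Answer:'."
    )

def run_chain(model: Callable[[str], str],
              templates: List[str], first_input: str) -> str:
    # Prompt chaining: each prompt consumes the previous prompt's output.
    current = first_input
    for template in templates:
        current = model(template.format(input=current))
    return current

seen: List[str] = []  # records every prompt the stub model receives

def fake_model(prompt: str) -> str:
    # Stand-in for a real model call.
    seen.append(prompt)
    return prompt.splitlines()[-1].upper()

single = cot_prompt(
    "A train leaves at 9:40 and arrives at 11:15. How long is the trip?"
)
chained = run_chain(
    fake_model,
    ["Summarize:\n{input}", "List action items from:\n{input}"],
    "refund policy text",
)
print(len(seen), "model calls in the chain")
```

The two techniques are not mutually exclusive: any individual prompt inside a chain can itself ask for step-by-step reasoning.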

Variants of Chain of Thought Prompting

Chain of thought prompting has evolved into multiple variants to support different agent scenarios:

- Zero-shot chain of thought, where models reason without task-specific examples.

- Automatic chain of thought, which reduces manual prompt design by generating reasoning paths automatically.

- Multimodal chain of thought, which integrates text with visual inputs such as images or UI elements.

These variants are particularly relevant as AI agents expand beyond text-only environments and begin operating across multimodal workflows.

Role of Chain of Thought in Modern AI Agents

Chain-of-thought prompting does not replace agent architecture, tools, or models. Instead, it strengthens the reasoning layer that connects perception to action. In modern AI agents, CoT works alongside function calling, retrieval, and model orchestration to help businesses make data-driven decisions.

As AI agents continue to evolve toward greater autonomy, transparent and structured reasoning mechanisms, such as chain-of-thought prompting, play a primary role in making agent behavior understandable and trustworthy.

Importance of LAMs, Small Language Models and Tools in AI Agent Architecture

Large Language Models alone are not sufficient to support action-oriented, real-world agent behavior. This gap has led to the emergence of Large Action Models (LAMs) and Small Language Models (SLMs), and to a stronger emphasis on function calling, retrieval, and tools within the AI agent workflow.

Large Action Models (LAMs): Enabling Action-Centric AI Agents

Much as Large Language Models transformed natural language processing, Large Action Models (LAMs) are designed to transform how AI agents interact with their environments. While LLMs excel at language generation and reasoning, LAMs focus on turning intent into executable actions.

LAMs are built to go beyond text generation. One of their defining characteristics is strong support for function calling, which allows AI agents to translate user intent into structured parameters that can trigger external processes. Beyond function calling, LAMs (such as those used in web agents) can also navigate graphical user interfaces (GUIs) when no API exists. This capability is especially valuable when integrating with legacy systems that do not expose APIs but still require automation.
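Function calling can be sketched as follows: the model emits a structured request, and the agent validates it before running anything. The JSON shape (`name` plus `arguments`), the `TOOLS` registry, and `get_order_status` are hypothetical and not tied to any particular vendor's format.

```python
import json
from typing import Any, Callable, Dict, Set, Tuple

def get_order_status(order_id: str) -> str:
    # Placeholder for a call to a real order system.
    return f"order {order_id}: shipped"

# Registry: tool name -> (callable, required parameter names)
TOOLS: Dict[str, Tuple[Callable[..., Any], Set[str]]] = {
    "get_order_status": (get_order_status, {"order_id"}),
}

def dispatch(call_json: str) -> Any:
    # Parse the model's structured output and validate it before executing.
    call = json.loads(call_json)
    name = call["name"]
    args = call.get("arguments", {})
    if name not in TOOLS:
        raise ValueError(f"unknown tool: {name}")
    func, required = TOOLS[name]
    missing = required - set(args)
    if missing:
        raise ValueError(f"missing arguments: {sorted(missing)}")
    return func(**args)

# Assumed model output for the request "Where is order A-1001?"
model_output = '{"name": "get_order_status", "arguments": {"order_id": "A-1001"}}'
status = dispatch(model_output)
print(status)
```

Validating the tool name and arguments before execution matters because model output is untrusted input: the agent should only run tools it has explicitly registered.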

Model Orchestration and the Role of Small Language Models

While LAMs provide the action layer, model orchestration enables AI agents to combine multiple models, especially Small Language Models (SLMs), to efficiently handle specialized tasks.

Instead of relying exclusively on large, resource-intensive models, agents can orchestrate smaller models for:

- Summarizing data.

- Parsing user input.

- Extracting structured information.

- Providing insights based on historical context.

SLMs are particularly valuable during development and testing, as they can be run locally and offline. This makes them an important part of autonomous systems, especially when cost, latency, or deployment constraints are a concern.
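A simple form of model orchestration is a router that sends narrow tasks to a small model and everything else to a larger one. In this sketch, both models are stub functions and the task labels are assumptions; in practice the routing rule might depend on cost, latency, or input size.

```python
from typing import Callable

def small_model(prompt: str) -> str:
    # Stub for a locally hosted small language model.
    return f"[small] {prompt}"

def large_model(prompt: str) -> str:
    # Stub for a larger, more resource-intensive model.
    return f"[large] {prompt}"

# Task types that the list above suggests as good fits for smaller models.
SMALL_MODEL_TASKS = {"summarize", "parse", "extract"}

def route(task_type: str) -> Callable[[str], str]:
    # Pick the cheapest model that is expected to handle the task.
    return small_model if task_type in SMALL_MODEL_TASKS else large_model

def run(task_type: str, prompt: str) -> str:
    return route(task_type)(prompt)

print(run("summarize", "Q3 sales notes"))
print(run("plan", "Reorganize the delivery schedule"))
```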

While SLMs already handle language understanding and generation effectively, Retrieval-Augmented Generation (RAG) equips them with access to external knowledge. This improves factual grounding and task performance, although it does not fully close the gap with larger models. This makes RAG a key enabler in the design of scalable AI agent architectures.
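The retrieval step can be illustrated with a deliberately simple sketch. It ranks documents by word overlap with the query and places the best match into the prompt. The documents, the scoring rule, and the function names are assumptions; production systems typically rank with embeddings and a vector store instead of word overlap.

```python
import re
from typing import List, Set

DOCS = [
    "Refunds are issued within 14 days of a return.",
    "Standard shipping takes 3 to 5 business days.",
    "Support is available on weekdays from 9 to 17.",
]

def tokens(text: str) -> Set[str]:
    # Lowercase word set used for a crude similarity score.
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def retrieve(query: str, docs: List[str], k: int = 1) -> List[str]:
    # Rank documents by how many words they share with the query.
    query_words = tokens(query)
    ranked = sorted(docs, key=lambda d: len(query_words & tokens(d)), reverse=True)
    return ranked[:k]

def build_prompt(query: str, docs: List[str]) -> str:
    # Ground the model by placing retrieved text ahead of the question.
    context = "\n".join(f"- {doc}" for doc in retrieve(query, docs))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"

prompt = build_prompt("How long does shipping take?", DOCS)
print(prompt)
```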

Tools, Pipelines, and Human-in-the-Loop Integration

Tools are the mechanisms through which AI agents interact with the world. In practice, tools function as pipelines that move data between stages of agentic workflows, transforming inputs into actions and outcomes.

Tools can include:

- APIs and automation scripts.

- Data processing pipelines.

- External services.

- Humans in the loop.
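Treating a human as one more tool can be sketched as an approval gate in front of a sensitive action. The refund scenario, the 100.0 limit, and the `approve` callback are hypothetical; in practice the callback might open a ticket or send a chat message and wait for a reply.

```python
from typing import Callable

def issue_refund(amount: float) -> str:
    # Placeholder for a call to a real payment system.
    return f"refunded {amount:.2f}"

def run_with_approval(action: Callable[[float], str], amount: float,
                      approve: Callable[[str], bool],
                      limit: float = 100.0) -> str:
    # Small amounts run automatically; larger ones need a human decision.
    if amount > limit and not approve(f"Approve refund of {amount:.2f}?"):
        return "escalated: awaiting human review"
    return action(amount)

automatic = run_with_approval(issue_refund, 25.0, approve=lambda question: False)
declined = run_with_approval(issue_refund, 500.0, approve=lambda question: False)
approved = run_with_approval(issue_refund, 500.0, approve=lambda question: True)
print(automatic, declined, approved, sep="\n")
```

Placing the gate around the action, rather than inside the model prompt, keeps the approval rule enforceable even if the model's reasoning goes wrong.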

Why LAMs, SLMs, and Tools Matter for AI Agents

As autonomous systems evolve beyond language generation, their capabilities for reasoning, execution, information retrieval, and real-world integration expand as well.

LAMs focus on action-based execution; SLMs make specialized tasks more efficient; RAG grounds responses in retrieved information; and tools connect autonomous systems to the real world.

All of these components together form the foundation for an extensible, scalable architecture for AI agents.

Conclusion

AI agents are autonomous systems that reason, act, and adapt their behavior in real time based on context and feedback. They go beyond the traditional limitations of Large Language Models (LLMs) by combining various modelling and procedural approaches to deliver complete, intelligent, and actionable solutions.

Autonomous systems help business leaders streamline and automate complex, multistep workflows, monitor dynamic environments, and scale operational efficiency without sacrificing quality or oversight. By orchestrating multiple models and tools, companies can deploy AI agents that not only respond intelligently but also continuously learn, adapt, and optimize outcomes across customer service, logistics, finance, cybersecurity, and other sectors.