Hiring for AI? 5 Signs Your “Python Developer” Isn’t Ready for Production


Hiring Python developers for AI projects is not just a technical decision; it is a business-critical one. The right Python AI team can help you turn data into insights, automate decisions, and create long-term competitive advantage. The wrong hire can delay outcomes, increase costs, and reduce AI ROI.

Many CXOs assume that strong Python skills alone are enough to deliver AI success. In practice, AI projects require problem framing, data readiness, model evaluation, and scalability planning across business and engineering teams. Small AI hiring mistakes often show up later as missed timelines, unclear results, or solutions that cannot scale.

This blog highlights five common mistakes leaders make when hiring Python developers for AI initiatives. More importantly, it explains how to avoid them so your investment in AI delivers measurable business value, not just working code.

Top Mistakes to Avoid When Hiring Python Developers for AI Initiatives

Before you hire Python developers for AI projects, remember: the role definition you choose shapes delivery quality and long-term scalability. The right hire strengthens AI outcomes, model reliability, and cost control. Here are the top hiring mistakes to avoid:

Mistake 1. Hiring Python Developers When You Need Python AI Developers or AI/ML Engineers

The Trap

This is a common mistake: treating Python development and AI development as the same skill set.

The Reality

A strong Python developer may ship reliable software.

But AI in production is a different problem. It’s less about code elegance and more about how models behave in the real world, under cost pressure and user expectations.

The Skill Gap (Python Developer vs AI Engineer)

| Standard Python Developer | AI Engineer / Python AI Developer |
|---|---|
| API development (FastAPI, Flask, Django) | Model-backed APIs (inference + safeguards) |
| Backend business logic | Feature engineering from messy data |
| Integrations & automation scripts | ML pipelines (training, evaluation, retraining) |
| Data pipelines & ETL workflows | Model evaluation against real-world targets |
| CRUD apps, web services | RAG pipelines, vector databases |
| Focus on correctness | Balances accuracy, latency, cost, and reliability |
| Manual fixes when things break | Monitoring, drift detection, rollback planning |

This is where the difference becomes real: one builds software workflows, the other owns AI behavior in production.

The Fix

Fix the role definition before you interview and hire Python developers for AI projects. Decide what you’re building and hire to that output.

Use this quick mapping:

- If you need APIs, pipelines, and integrations → Hire Python developers

- If you need ML features (recommendations, scoring, classification) → Go for Python AI developers

- If you need deployment, monitoring, and cost controls → Hire AI/ML engineers

If your project includes GenAI (LLMs), RAG pipelines, or AI agents, you need stronger production and security readiness. You’re not only building a model – you’re building an AI system that must protect data, control costs, and handle prompt-injection risks safely.

You also need an evaluation plan that matches real user behavior. A strong candidate should explain how they measure and improve GenAI quality in production, including:

- Groundedness/citation accuracy: Is the answer supported by the retrieved sources?

- Hallucination rate: How often does it invent facts or make unsupported claims?

- Retrieval quality: Are we pulling the right documents (precision/recall, hit rate)?

- Cost per request and token controls: How do they keep usage and spend predictable?

- Latency budgets: How do they meet response-time targets for copilots and chat flows?

If they focus only on prompts and model choice, ask for evidence of metrics, monitoring, and iteration in production.
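To make retrieval quality measurable rather than anecdotal, here is a minimal Python sketch, assuming you keep a small labeled evaluation set in which each query lists the document IDs your retriever returned and the IDs a reviewer marked as relevant. The function names and data shapes are illustrative, not taken from any specific RAG library.

```python
from statistics import mean
from typing import Dict, List, Sequence, Set, Tuple


def retrieval_metrics(retrieved: Sequence[str], relevant: Set[str], k: int) -> Dict[str, float]:
    """Precision@k, recall@k and hit@k for a single query."""
    top_k = list(retrieved)[:k]
    hits = sum(1 for doc_id in top_k if doc_id in relevant)
    return {
        "precision": hits / k,                                # share of top-k results that are relevant
        "recall": hits / len(relevant) if relevant else 0.0,  # share of relevant docs that were retrieved
        "hit": 1.0 if hits > 0 else 0.0,                      # did at least one relevant doc appear?
    }


def evaluate_retriever(eval_set: List[Tuple[Sequence[str], Set[str]]], k: int = 3) -> Dict[str, float]:
    """Average each metric over the evaluation set (the averaged 'hit' is the hit rate)."""
    per_query = [retrieval_metrics(retrieved, relevant, k) for retrieved, relevant in eval_set]
    return {name: mean(m[name] for m in per_query) for name in ("precision", "recall", "hit")}


# Hypothetical labeled queries: (IDs the retriever returned, IDs a reviewer marked relevant)
eval_set = [
    (["d1", "d7", "d3"], {"d3", "d4"}),  # one of two relevant docs found in the top 3
    (["d2", "d5", "d9"], {"d8"}),        # relevant doc missed entirely
]
print(evaluate_retriever(eval_set, k=3))  # recall 0.25, hit rate 0.5, precision about 0.17
```

Tracking these numbers per release makes it easy to tell whether a prompt, chunking, or embedding change actually improved retrieval.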

Quick interview check (role fit):

Ask: “What parts of the AI lifecycle have you owned end-to-end?”

If the candidate has only worked in notebooks, they are likely a mismatch for a production role.

Mistake 2. Screening for Framework Names Instead of Production AI Skills

The Trap

Many hiring loops for Python developers for AI projects are framework-driven:

- “Do they know TensorFlow?”

- “Do they know PyTorch?”

- “Have they used Hugging Face?”

The Reality

Framework knowledge is useful, but it does not guarantee delivery. In AI projects, the biggest risks are data quality, evaluation methods, and production constraints.

Many AI failures come from:

- Poor data handling

- Wrong metrics

- Ignored failure modes

- Uncontrolled cost

Strong Python AI developers understand trade-offs, not just libraries.

The Fix

Hire for delivery skills, not tool familiarity.

Look for strength in:

- Data handling: validation, leakage prevention, reproducibility

- Evaluation: metrics that match business outcomes

- Failure thinking: drift, edge cases, brittle features

- Cost awareness: token limits, caching, usage controls
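Leakage prevention is easier to discuss with a concrete check. The sketch below assumes tabular rows with hypothetical `event_date` and `customer_id` fields: it splits by time so the test set only contains later events, then lists customers that appear in both splits. Whether that overlap is a problem depends on the use case; it matters when the model must work for customers it has never seen.

```python
from datetime import date
from typing import Dict, List, Set, Tuple

Row = Dict[str, object]


def time_based_split(rows: List[Row], cutoff: date) -> Tuple[List[Row], List[Row]]:
    """Train on events before the cutoff and test on events from the cutoff on,
    so evaluation never relies on information from the 'future'."""
    train = [r for r in rows if r["event_date"] < cutoff]
    test = [r for r in rows if r["event_date"] >= cutoff]
    return train, test


def overlapping_entities(train: List[Row], test: List[Row], key: str = "customer_id") -> Set[object]:
    """Entities present in both splits. If the model must generalize to new
    customers, a non-empty result suggests test scores are inflated."""
    return {r[key] for r in train} & {r[key] for r in test}


rows = [
    {"customer_id": "a", "event_date": date(2024, 1, 5)},
    {"customer_id": "b", "event_date": date(2024, 2, 1)},
    {"customer_id": "a", "event_date": date(2024, 3, 10)},
]
train, test = time_based_split(rows, cutoff=date(2024, 3, 1))
print(len(train), len(test), overlapping_entities(train, test))
```

A candidate who asks "what will the model know at prediction time?" before choosing a split is usually thinking about leakage the right way.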

Interview questions that work:

- “Which metric mattered most—and why?”

- “When did your model underperform in production?”

- “How did you control inference cost?”

- “What data issue slowed you down the most?”

Hiring filter:

Ask for an example where results improved by changing data or evaluation, not by switching frameworks.

Mistake 3. Accepting Demo Results Instead of Verifying Production AI Experience

The Trap

Judging candidates by clean demos and notebooks.

The Reality

A strong demo can hide weak delivery.

- Demo AI: Clean datasets, one-time notebook runs, controlled assumptions

- Production AI: Messy inputs, monitoring, retraining/iteration, and safe failure handling.

A strong portfolio helps, but it’s not proof of production deployment experience. Kaggle medals or popular GitHub repositories can show talent, but they don’t guarantee the person has shipped Python for AI into production.

For real outcomes, hire Python AI developers who have run models in production, where inputs are unpredictable, users behave inconsistently, and performance degrades over time.

The Fix

Hire developers who have operated AI after launch.

Real production experience includes:

- Input validation and fallback logic

- Model versioning and rollback

- Monitoring (quality, latency, cost)

- Alert thresholds

- Post-launch iteration
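A minimal sketch of several of these items (input validation, fallback, versioning with rollback, and a simple alert threshold), assuming a text-classification model wrapped as a plain Python function. `ModelRegistry`, `FALLBACK_LABEL`, and the 5% alert threshold are hypothetical placeholders; production teams usually rely on a real model registry and monitoring stack rather than in-memory objects.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

Predictor = Callable[[dict], str]
FALLBACK_LABEL = "needs_human_review"  # hypothetical safe default: route the case to a person


@dataclass
class ModelRegistry:
    """Hypothetical in-memory registry that keeps every deployed version,
    so rolling back is a single call rather than a redeploy."""
    versions: Dict[str, Predictor] = field(default_factory=dict)
    history: List[str] = field(default_factory=list)

    def deploy(self, version: str, predictor: Predictor) -> None:
        self.versions[version] = predictor
        self.history.append(version)

    def rollback(self) -> str:
        """Make the previously deployed version active again (always keeps one)."""
        if len(self.history) > 1:
            self.history.pop()
        return self.active

    @property
    def active(self) -> str:
        return self.history[-1]


def validate(payload: dict) -> List[str]:
    """Reject inputs the model was never designed for, before they reach it."""
    text = payload.get("text")
    if not isinstance(text, str) or not text.strip():
        return ["text must be a non-empty string"]
    if len(text) > 5000:
        return ["text exceeds 5,000 characters"]
    return []


def predict_with_fallback(registry: ModelRegistry, payload: dict) -> dict:
    if validate(payload):
        return {"label": FALLBACK_LABEL, "version": "fallback"}
    version = registry.active
    try:
        return {"label": registry.versions[version](payload), "version": version}
    except Exception:
        # Any model error degrades to the safe default instead of failing the request.
        return {"label": FALLBACK_LABEL, "version": "fallback"}


def breaches_alert_threshold(fallbacks: int, total: int, max_rate: float = 0.05) -> bool:
    """Alert when the share of fallback responses exceeds max_rate."""
    return total > 0 and fallbacks / total > max_rate


def broken_v2(payload: dict) -> str:
    raise RuntimeError("bad model build")


registry = ModelRegistry()
registry.deploy("v1", lambda p: "positive")
registry.deploy("v2", broken_v2)
print(predict_with_fallback(registry, {"text": "great service"}))  # v2 fails, so the fallback answers
registry.rollback()
print(predict_with_fallback(registry, {"text": "great service"}))  # served by v1 again
```

Counting fallback responses per hour and feeding them into `breaches_alert_threshold` is one simple way to turn "monitoring" into an alert someone actually receives.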

Key interview questions:

- What broke after deployment?

- What did you monitor in the first month?

- What was your rollback plan?

Fast proof step (critical):

“Model quality dropped in week 3. What do you check first—and what do you do next?”

Strong candidates answer step-by-step. Weak ones stay theoretical.
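One reasonable first check for a week-3 drop is whether the inputs themselves have shifted. A common way to quantify that is the Population Stability Index (PSI), sketched below in plain Python. The bin edges, the sample values, and the thresholds in the comment are illustrative assumptions; a strong candidate would also check labels, upstream pipelines, and recent deployments.

```python
import math
from bisect import bisect_right
from typing import List, Sequence


def bin_shares(values: Sequence[float], edges: Sequence[float], eps: float = 1e-6) -> List[float]:
    """Share of values per bin. Values outside the edges are clamped into the
    first/last bin; eps avoids log(0) when a bin is empty."""
    counts = [0] * (len(edges) - 1)
    for v in values:
        idx = min(max(bisect_right(edges, v) - 1, 0), len(counts) - 1)
        counts[idx] += 1
    return [max(c / len(values), eps) for c in counts]


def psi(baseline: Sequence[float], recent: Sequence[float], edges: Sequence[float]) -> float:
    """Population Stability Index: sum over bins of (recent - baseline) * ln(recent / baseline)."""
    b = bin_shares(baseline, edges)
    r = bin_shares(recent, edges)
    return sum((ri - bi) * math.log(ri / bi) for bi, ri in zip(b, r))


launch_week = [1, 2, 3, 4, 5, 6, 7, 8]  # hypothetical feature values at launch
week_3 = [5, 6, 7, 8, 9, 9, 9, 9]       # the same feature three weeks later
edges = [0, 4, 8]
score = psi(launch_week, week_3, edges)
print(f"PSI = {score:.2f}")
# Commonly cited rule of thumb (not a standard): < 0.1 stable, 0.1 to 0.25 moderate shift, > 0.25 investigate.
```

A large PSI on a key feature points toward a data or upstream change; a stable PSI with falling quality points toward label changes or a model issue.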

Mistake 4. Ignoring AI Security, Governance, and Risk Until After Launch

The Trap

Treating AI security and cost as “post-launch problems.”

The Reality

With LLMs, this becomes expensive very fast.

Common LLM security risks include:

- Prompt injection: A user tricks the model into ignoring your rules and following malicious instructions.

- Data leakage: Sensitive information (PII, internal data) gets exposed through prompts, logs, or responses.

- Tool/agent permission control: If the model can trigger tools (email, database queries, ticket updates), you must control what it is allowed to do and what it is never allowed to do.

- Output filtering and safe fallbacks: Sometimes the model will be wrong or unsafe. You need filters and a fallback path (human review, “I don’t know,” or a safe default action).

- Cost governance (often ignored until it’s too late): Without controls, LLM usage can spike unpredictably. Long prompts, retries, agent loops, or abuse can quickly lead to runaway API bills.

- Explainability and bias risk: If AI influences approvals, pricing, or risk decisions, black-box outputs increase regulatory, legal, and reputational exposure.
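Tool permissions and log masking are both enforced in ordinary application code, outside the model. The sketch below assumes hypothetical tool names (`tickets`, `crm_db`, `email`) and a deny-by-default allowlist; the two regular expressions catch only obvious patterns and are not a complete PII solution.

```python
import re
from typing import Dict, Set, Tuple

# Hypothetical policy: deny by default, allow only listed (tool, action) pairs.
ALLOWED_ACTIONS: Dict[str, Set[str]] = {
    "tickets": {"read", "comment"},
    "crm_db": {"select"},
}
# Actions the agent must never perform, even if someone later widens the allowlist.
NEVER_ALLOWED: Set[Tuple[str, str]] = {("crm_db", "delete"), ("email", "send_external")}


class ToolPermissionError(PermissionError):
    """Raised when the model requests a tool action outside its permissions."""


def authorize(tool: str, action: str) -> None:
    """Check a tool call requested by the model before executing it. The policy
    lives in code, so an injected prompt cannot rewrite it."""
    if (tool, action) in NEVER_ALLOWED:
        raise ToolPermissionError(f"{tool}.{action} is never allowed")
    if action not in ALLOWED_ACTIONS.get(tool, set()):
        raise ToolPermissionError(f"{tool}.{action} is not on the allowlist")


EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")
LONG_NUMBER_RE = re.compile(r"\b\d{12,19}\b")  # e.g. card or account numbers


def mask_for_logs(text: str) -> str:
    """Mask obvious PII before prompts or responses are written to logs."""
    text = EMAIL_RE.sub("[EMAIL]", text)
    return LONG_NUMBER_RE.sub("[NUMBER]", text)


authorize("tickets", "comment")  # permitted: returns None
try:
    authorize("crm_db", "delete")
except ToolPermissionError as err:
    print("blocked:", err)
print(mask_for_logs("Refund jane.doe@example.com, card 4111111111111111"))
```

The key design choice is that the model only proposes actions; code that the model cannot influence decides whether they run.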

The Fix

Build a simple “AI safety checklist” into hiring and planning:

- What data is allowed to be used, and what is blocked?

- How do you prevent prompt injection from overriding system rules?

- What is logged, what is masked, and how long is it kept?

- If the model can use tools, what permissions does it have (and what is restricted)?

- How are API costs controlled? (Token limits, rate limits, budget caps, caching, usage alerts)

- What happens when the AI is unsure or the request looks risky? (safe fallback rules)

- How do you test failure cases before launch? (bad inputs, edge cases, adversarial prompts)
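The cost questions in this checklist translate directly into code. A minimal sketch, assuming an LLM client you can call as a plain function (`fake_llm` below is a stand-in): it applies a prompt token limit, a cap on output tokens, a daily budget, and an exact-match cache. The token estimate is a rough character-based heuristic, and the price per 1,000 tokens is a placeholder, not any provider's actual rate.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict

LLM = Callable[[str, int], str]  # hypothetical client: (prompt, max_output_tokens) -> text


@dataclass
class CostGuard:
    max_prompt_tokens: int
    max_output_tokens: int
    daily_budget_usd: float
    usd_per_1k_tokens: float  # placeholder rate; use your provider's real pricing
    spent_today_usd: float = 0.0
    cache: Dict[str, str] = field(default_factory=dict)

    @staticmethod
    def estimate_tokens(text: str) -> int:
        # Rough heuristic (about 4 characters per token for English text).
        # In practice, count with the provider's own tokenizer.
        return max(1, len(text) // 4)

    def cost(self, tokens: int) -> float:
        return tokens / 1000 * self.usd_per_1k_tokens

    def ask(self, prompt: str, llm: LLM) -> str:
        if prompt in self.cache:  # caching: a repeated question costs nothing
            return self.cache[prompt]
        prompt_tokens = self.estimate_tokens(prompt)
        if prompt_tokens > self.max_prompt_tokens:  # token limit on input
            return "FALLBACK: request too long"
        worst_case = self.cost(prompt_tokens + self.max_output_tokens)
        if self.spent_today_usd + worst_case > self.daily_budget_usd:  # budget cap
            return "FALLBACK: daily AI budget reached"
        answer = llm(prompt, self.max_output_tokens)  # output is capped at the source
        self.spent_today_usd += self.cost(prompt_tokens + self.estimate_tokens(answer))
        self.cache[prompt] = answer
        return answer


calls = []


def fake_llm(prompt: str, max_output_tokens: int) -> str:
    """Stand-in for a real client; records each call so caching is visible."""
    calls.append(prompt)
    return "Your order ships in 2 days."


guard = CostGuard(max_prompt_tokens=500, max_output_tokens=200,
                  daily_budget_usd=1.00, usd_per_1k_tokens=0.01)
print(guard.ask("When does my order ship?", fake_llm))
print(guard.ask("When does my order ship?", fake_llm))  # cache hit: fake_llm is not called again
print(guard.ask("x" * 4000, fake_llm))                  # about 1,000 estimated tokens: over the limit
print(len(calls), round(guard.spent_today_usd, 6))
```

Checking the worst-case cost before the call, then recording the estimated actual cost after it, keeps the budget cap conservative without overstating daily spend.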

Hiring move:

Ask candidates how they handle prompt injection, sensitive data, tool permissions, and safe fallbacks in real systems. You don’t need deep security jargon. You need clear boundaries and habits.

Mistake 5. Hiring Without a Clear Scope, Success Metrics, and an AI Delivery Model

The Trap

Hiring before defining success.

The Reality

This is a common AI hiring issue: teams build something promising, but without ownership and metrics it won’t be adopted, trusted, or maintained. That’s how AI budgets lose momentum and ROI.

What usually goes wrong

- The goal is vague (“Make it smarter”) instead of measurable (“Reduce handling time by 20%”)

- Data reality appears late: Access approvals, PII rules, or missing datasets slow or block progress after hiring

- No delivery or ownership model: The system ships, but no one owns monitoring, cost control, fixes, or iteration

The Fix

Before hiring, align on just three things:

- Business goal: What workflow or decision will AI improve, and how will the business measure success?

- Data readiness: Do the required data sources exist, are they approved for use, and are privacy rules clear?

- Success criteria: What tells you this is working (business impact, AI quality, adoption, and cost limits)?

If you can’t answer these clearly, you’re hiring into ambiguity, and even strong engineers will struggle.
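Writing the three answers down as explicit, testable targets helps. The sketch below encodes the “Reduce handling time by 20%” example alongside quality, adoption, and cost thresholds; every threshold and field name is a hypothetical example to replace with your own numbers.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass
class SuccessCriteria:
    """Hypothetical targets agreed before hiring; every number here is an example."""
    min_handling_time_reduction: float  # business impact, e.g. 0.20 = "reduce handling time by 20%"
    min_quality_score: float            # AI quality, e.g. share of answers rated correct in review
    min_adoption_rate: float            # share of eligible users who actually use the feature
    max_cost_per_request_usd: float     # cost limit


def check_pilot(c: SuccessCriteria, baseline_minutes: float, current_minutes: float,
                quality: float, adoption: float, cost_per_request_usd: float) -> Dict[str, bool]:
    """Compare measured pilot results against each agreed target."""
    reduction = (baseline_minutes - current_minutes) / baseline_minutes
    return {
        "business_impact": reduction >= c.min_handling_time_reduction,
        "ai_quality": quality >= c.min_quality_score,
        "adoption": adoption >= c.min_adoption_rate,
        "within_cost": cost_per_request_usd <= c.max_cost_per_request_usd,
    }


criteria = SuccessCriteria(0.20, 0.90, 0.50, 0.02)
result = check_pilot(criteria, baseline_minutes=10.0, current_minutes=7.5,
                     quality=0.93, adoption=0.35, cost_per_request_usd=0.015)
print(result)  # strong impact and quality, but adoption misses its target
```

A result like this tells you exactly where to focus: here the model works, but the rollout or workflow integration does not yet.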

Hiring move:

Define the role by its outcome: “Deliver an AI feature that improves X KPI, runs within cost limits, and is owned after launch,” not “Must know LangChain, PyTorch, and vector databases.”

This single change can prevent months of wasted effort and help the right candidates self-select.

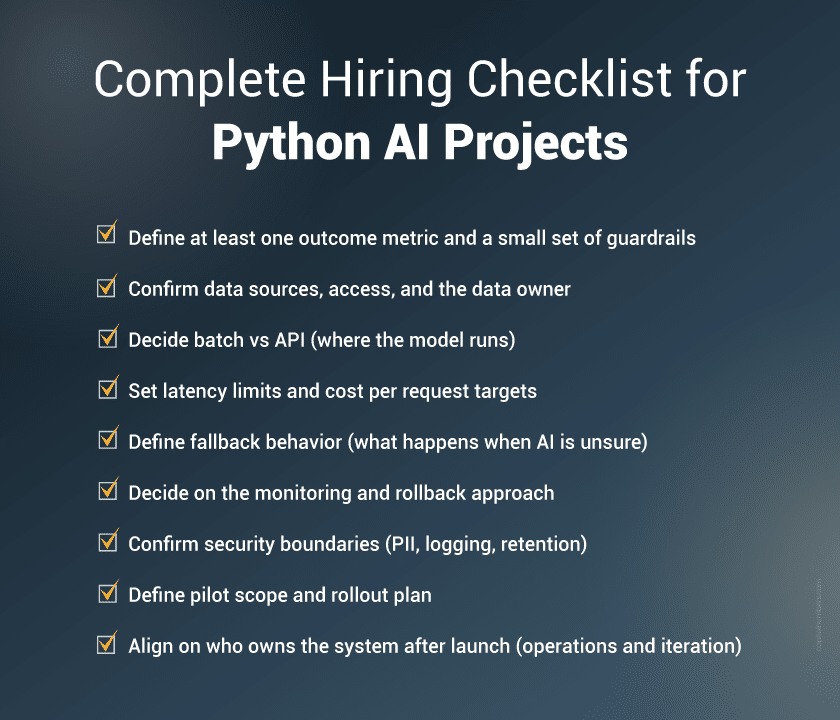

Hiring Checklist for Python AI Projects

How to Hire Python Developers for AI Projects

Here’s a practical approach that keeps hiring aligned with business outcomes.

Start with the AI Delivery Scorecard

Use this scorecard to evaluate candidates (or vendors) quickly and effectively:

| Category | Criteria | Score Range |
|---|---|---|
| A. Applied AI Delivery | Has shipped AI features into production; can explain metrics and trade-offs | 0–5 |
| B. Data Competence | Can handle messy data, validation, and versioning | 0–5 |
| C. Production Readiness | Experience with APIs, deployment, monitoring, and rollback; understands drift and iteration | 0–5 |
| D. Security & Risk Thinking | Understands LLM security basics (if GenAI is involved), privacy boundaries, and safe design | 0–5 |
| E. Communication | Can explain AI decisions to non-technical stakeholders; writes clear docs that support stakeholder trust | 0–5 |

A strong hire (or team) scores well across all categories, not just in Python.
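If several interviewers score candidates, a small helper keeps the “well across categories” rule explicit. This sketch assumes the five scorecard categories, a minimum of 3 per category, and a minimum total of 18; both thresholds and the snake_case category keys are illustrative choices, not a standard.

```python
from typing import Dict

CATEGORIES = (
    "applied_ai_delivery",   # A
    "data_competence",       # B
    "production_readiness",  # C
    "security_risk",         # D
    "communication",         # E
)


def assess(scores: Dict[str, int], min_per_category: int = 3, min_total: int = 18) -> Dict[str, object]:
    """Sum the 0-5 scores, but also flag any weak category, since a single gap
    (for example, security) can sink a production AI project."""
    for name in CATEGORIES:
        if not 0 <= scores.get(name, -1) <= 5:
            raise ValueError(f"{name} needs a score from 0 to 5")
    total = sum(scores[name] for name in CATEGORIES)
    weak = [name for name in CATEGORIES if scores[name] < min_per_category]
    return {"total": total, "weak_areas": weak, "advance": total >= min_total and not weak}


print(assess(dict.fromkeys(CATEGORIES, 4)))  # balanced candidate
print(assess({"applied_ai_delivery": 5, "data_competence": 5, "production_readiness": 5,
              "security_risk": 1, "communication": 5}))  # higher total, but a security gap
```

The second candidate has the higher total yet does not advance, which is the point of scoring across categories rather than on overall impression.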

Ask for Proof That Matches Your Use Case

Instead of just asking for a portfolio, request tangible examples:

- A shipped AI solution relevant to your domain

- The baseline performance before iteration

- The improvements made post-iteration

- Monitoring practices used in production

- How they handled quality dips

This ensures alignment with real-world delivery, especially in environments where model drift is common.

Hire for the AI Lifecycle, Not Just the First Build

AI projects require continuous attention. Focus on candidates who understand that AI is a dynamic system that needs:

- Monitoring

- Updates

- Retraining (when required)

- Cost tuning

- Safety testing

This is why MLOps and lifecycle thinking are essential in AI development projects: they keep the system production-ready well beyond the initial build.


Bottom Line

AI projects underperform when hiring focuses on Python syntax instead of end-to-end AI delivery. A model running in a demo is easy. The actual work starts when it has to run inside your product, handle messy data, stay accurate over time, and stay within budget.

So when you hire, focus on output. If your goal is AI development with Python, look beyond basic coding and assess Python AI developer skills such as data handling, evaluation, deployment readiness, and monitoring. This is what separates strong Python AI specialists from people who only know tools on paper.

When you hire Python AI developers who can take AI from build to production, you avoid rework, protect timelines, and get results that keep improving after launch.

If you want AI that is built to ship and run reliably, Capital Numbers can help you hire the right team and deliver production-ready AI. Want to hire Python developers for AI projects? Book a 30-minute discovery call to discuss scope, data, metrics, and team structure!